Kubernetes

Cost Optimization

Optimize resources and cost at the cluster, node, and workload level.

Intelligent Workload Rightsizing

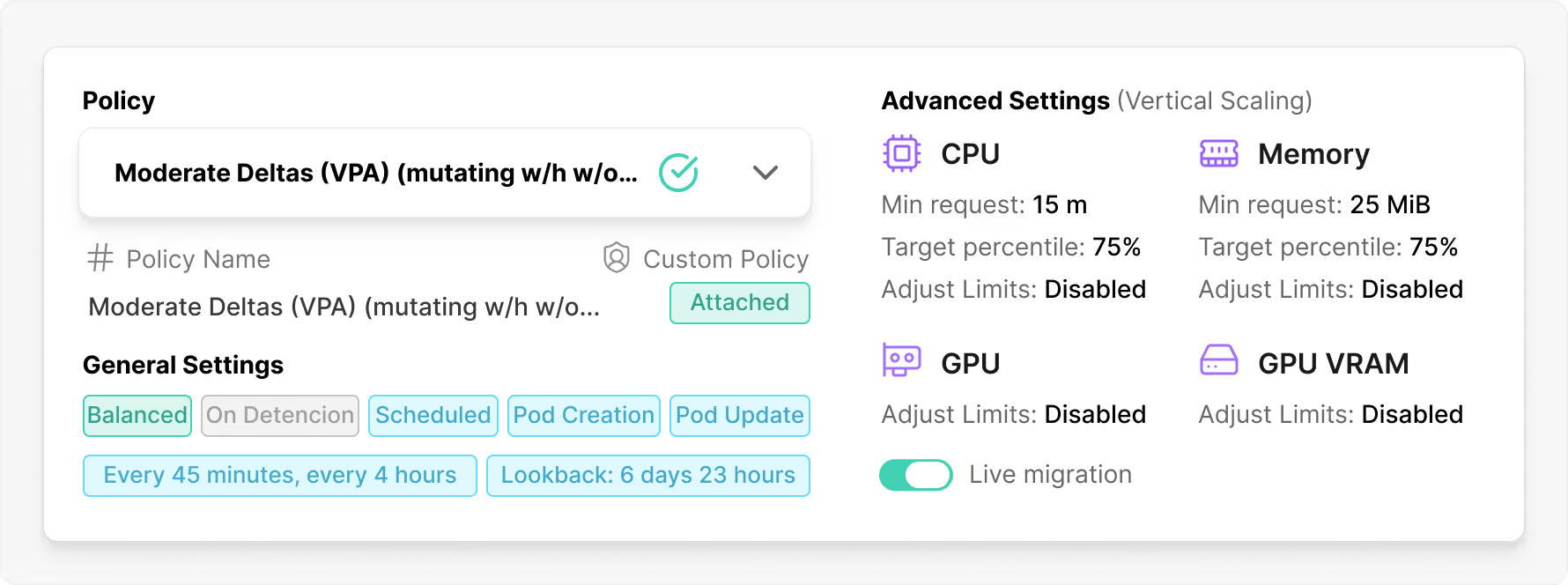

In standard Kubernetes, you set resource requests and limits by hand. You overprovision for peak loads, then pay for idle capacity 80% of the time. DevZero fixes this with live rightsizing: no pod restarts and no downtime.

DevZero uses XGBoost forecasting to predict future resource needs, avoiding inflated baselines for workloads that spike at startup. Optimization modes can be set per cluster, node pool, or workload:

- Statistical: Steady, low-churn adjustments

- Predictive: ML-driven aggressive cost reduction
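
To make the statistical mode concrete, here is a minimal sketch of one common rightsizing rule: take a high percentile of observed usage and add a safety margin. The function name, the 95th percentile, and the 15% headroom are assumptions for illustration, not DevZero's actual algorithm or defaults.

```python
from statistics import quantiles


def recommend_request(samples, percentile=95, headroom=0.15, floor=0.0):
    """Hypothetical statistical rightsizing rule (not DevZero's algorithm).

    samples: observed usage, e.g. CPU in millicores, one value per interval.
    Returns the given percentile of usage plus a relative safety margin.
    """
    if not samples:
        raise ValueError("need at least one usage sample")
    if not 1 <= percentile <= 99:
        raise ValueError("percentile must be between 1 and 99")
    if len(samples) == 1:
        base = samples[0]
    else:
        # quantiles(n=100) returns 99 cut points; index p - 1 is the p-th percentile.
        base = quantiles(samples, n=100, method="inclusive")[percentile - 1]
    return max(floor, base * (1 + headroom))


# CPU usage in millicores; 410 is a one-off startup spike.
cpu_millicores = [120, 135, 150, 410, 140, 130, 125]
print(round(recommend_request(cpu_millicores)))  # → 382
```

The single startup spike pulls the 95th percentile up to 332m even though steady-state usage sits around 120–150m. This is the kind of inflated baseline that a forecasting approach is meant to avoid.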

The platform monitors OOM kills, pod failures, and memory pressure to keep workloads stable. Resources scale up immediately during spikes and back down when idle.
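
That stability feedback can be sketched as an asymmetric rule: grow the memory request sharply after an OOM kill, and shrink it only gradually when usage stays well below the request. The function, the policy fields, and all of the numbers are hypothetical. They illustrate the idea rather than mirror DevZero's behavior.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryPolicy:
    # Hypothetical tuning knobs, not real DevZero settings.
    oom_bump: float = 1.5     # growth factor applied after an OOM kill
    idle_ratio: float = 0.5   # "idle" = peak usage below 50% of the request
    idle_shrink: float = 0.9  # shrink at most 10% per adjustment
    min_mib: int = 64         # never go below this floor


def next_memory_request(current_mib, peak_usage_mib, oom_killed,
                        policy=MemoryPolicy()):
    """Return the next memory request in MiB for one container."""
    if oom_killed:
        # Scale up fast: stability comes before savings.
        target = max(current_mib, peak_usage_mib) * policy.oom_bump
    elif peak_usage_mib < current_mib * policy.idle_ratio:
        # Scale down slowly, and never below the observed peak or the floor.
        target = max(policy.min_mib, peak_usage_mib,
                     current_mib * policy.idle_shrink)
    else:
        target = current_mib
    return math.ceil(target)


print(next_memory_request(512, 512, oom_killed=True))    # → 768
print(next_memory_request(1024, 200, oom_killed=False))  # → 922
```

Growing fast and shrinking slowly trades a little extra spend for fewer repeated OOM kills while the rule converges.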

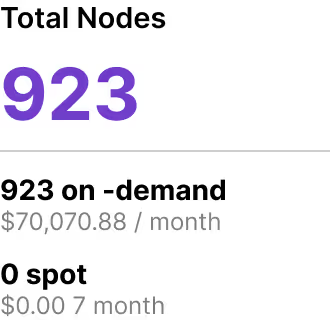

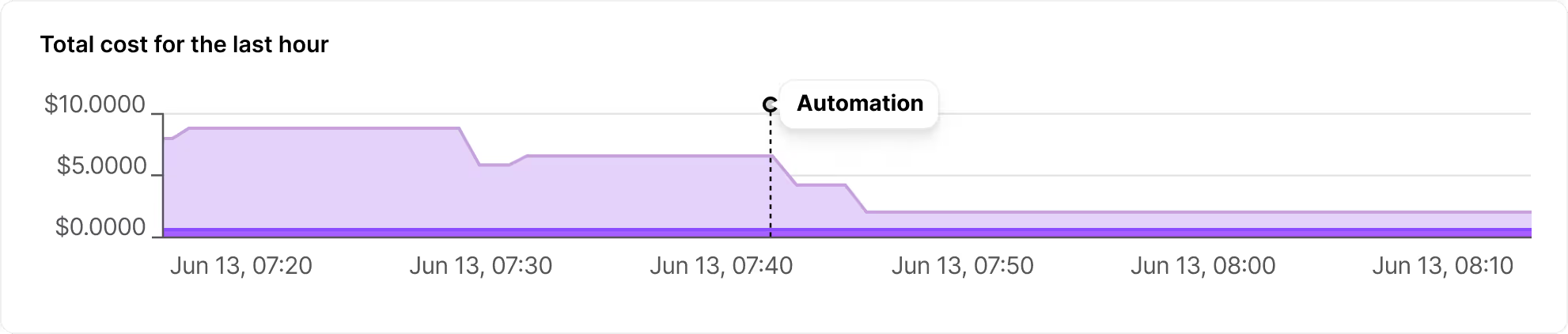

Cost-Based Autoscaler

DevZero integrates with HPA, VPA, and Karpenter rather than replacing them. It adds a predictive layer on top that makes smarter, cost-aware scaling decisions.

Most autoscalers react to past usage; DevZero predicts future demand. It handles bursty workloads such as CI pipelines, LLM inference, and JVM applications with fluctuating memory by analyzing CPU, memory, request patterns, and cost. Scaling decisions are coordinated so that VPA and HPA do not work against each other (for example, by both reacting to the same CPU or memory signal), which prevents resource thrashing and cascading evictions.
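
The difference between reacting and predicting can be shown with the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. Under that rule, no scaling happens when the ratio is within a tolerance (0.1 by default). The predictive variant below feeds the same rule a forecast instead of the latest observation. The naive least-squares trend is a stand-in assumed for illustration. It is not the XGBoost model DevZero describes.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         tolerance=0.1):
    """Core HPA rule: scale proportionally to the metric/target ratio."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target; avoid flapping
    return math.ceil(current_replicas * ratio)


def linear_forecast(history, steps_ahead):
    """Naive least-squares trend extrapolation (stand-in for an ML model)."""
    n = len(history)
    if n < 2:
        return history[-1]
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    return mean_y + slope * (n - 1 + steps_ahead - mean_x)


# Average CPU utilization (%) per pod over the last four intervals.
cpu_history = [40, 50, 60, 70]
reactive = hpa_desired_replicas(4, cpu_history[-1], target_metric=50)
predictive = hpa_desired_replicas(
    4, linear_forecast(cpu_history, steps_ahead=2), target_metric=50)
print(reactive, predictive)  # → 6 8
```

The reactive rule sizes for load that has already arrived (6 replicas). The forecast-driven rule provisions ahead of the trend (8 replicas), so new pods are ready before demand peaks.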

You set policies. DevZero executes them intelligently. The system learns your workload patterns and gets more accurate over time. You maintain visibility and control while eliminating manual intervention.

Node Optimization and Bin Packing

By default, the Kubernetes scheduler spreads pods across nodes rather than packing them efficiently. Nodes run at 30–40% utilization while you pay for 100% of their capacity. DevZero fixes this with intelligent bin packing and true zero-downtime migration.

Other platforms restart workloads during migration. DevZero uses CRIU (Checkpoint/Restore In Userspace) to checkpoint running workloads and resume them on another node. Migration preserves:

- Memory & process state

- TCP connections

- Filesystem

- Session state

Workloads can be migrated at any time with no downtime, no cold starts, and no dropped connections.

DevZero compacts pods onto fewer nodes and removes the nodes left idle, maximizing density and eliminating wasted capacity.
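
Compaction of this kind is a bin-packing problem. The sketch below applies first-fit decreasing, a classic heuristic, to pods with CPU and memory requests on identically sized nodes. It makes simplifying assumptions: uniform nodes and no affinity, taint, or disruption-budget constraints. It is a textbook illustration, not DevZero's placement algorithm.

```python
def first_fit_decreasing(pods, node_cpu_m, node_mem_mib):
    """Pack pods onto as few identical nodes as the heuristic finds.

    pods: list of (name, cpu_millicores, mem_mib) requests.
    Returns one list of pod names per node.
    """
    # Place the "largest" pods first, sized by their dominant resource.
    order = sorted(
        pods,
        key=lambda p: max(p[1] / node_cpu_m, p[2] / node_mem_mib),
        reverse=True,
    )
    nodes = []  # each node: [free_cpu_m, free_mem_mib, [pod names]]
    for name, cpu, mem in order:
        if cpu > node_cpu_m or mem > node_mem_mib:
            raise ValueError(f"{name} does not fit on an empty node")
        for node in nodes:
            if node[0] >= cpu and node[1] >= mem:
                node[0] -= cpu
                node[1] -= mem
                node[2].append(name)
                break
        else:  # no existing node had room: open a new one
            nodes.append([node_cpu_m - cpu, node_mem_mib - mem, [name]])
    return [node[2] for node in nodes]


pods = [
    ("api", 1500, 3000), ("worker", 1000, 2000), ("cache", 500, 4000),
    ("web", 1200, 1000), ("cron", 300, 500), ("batch", 2000, 2500),
]
print(first_fit_decreasing(pods, node_cpu_m=4000, node_mem_mib=8192))
# → [['batch', 'cache', 'web', 'cron'], ['api', 'worker']]
```

The six pods request 6,500m of CPU and 13,000 MiB of memory in total, so two 4,000m / 8,192 MiB nodes is the minimum possible here. A spreading scheduler could instead leave them across several partly empty nodes.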

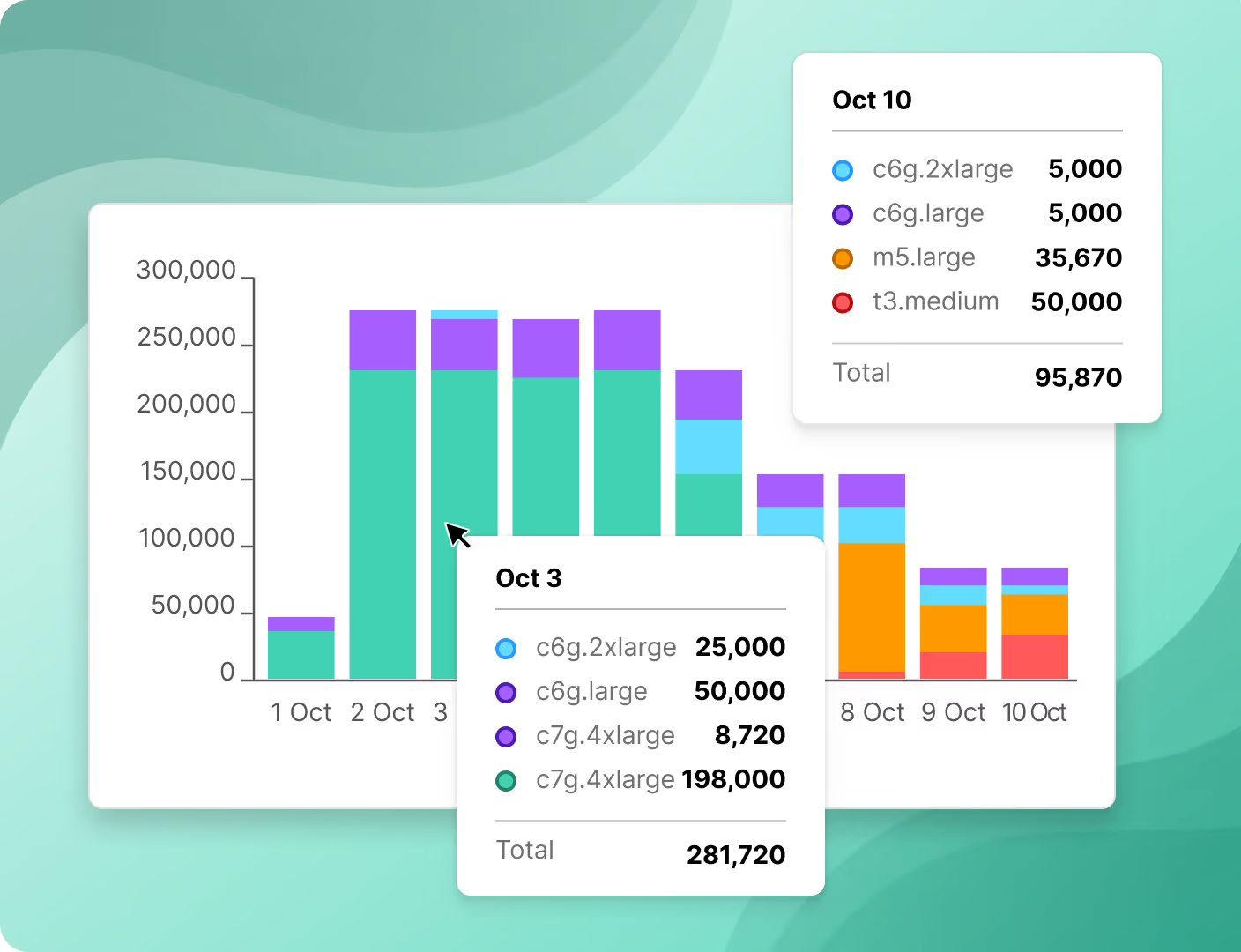

Intelligent Instance Selection

Choosing the right instance type is complex. Compute-optimized or memory-optimized? Spot or on-demand? Multiply these choices across regions, availability zones (AZs), and workload types, and manual management becomes impossible.

DevZero selects the most cost-efficient instance in real time. The algorithm considers:

- Current pricing across regions and AZs

- Spot availability and interruption patterns

- Reserved Instance (RI) and Savings Plan utilization

- Workload-specific requirements
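
A simplified version of that selection can be written in three steps:

1. Keep only offers that meet the workload's CPU and memory needs.
2. Exclude spot offers if the workload cannot tolerate interruption.
3. Rank the rest by price plus an expected-interruption penalty.

The instance names, prices, interruption rates, and penalty below are made-up illustrative values. The scoring formula is an assumption, not DevZero's algorithm. Commitment (RI/Savings Plan) awareness is omitted to keep the sketch short.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    instance_type: str
    zone: str
    vcpu: int
    mem_gib: int
    hourly_usd: float
    spot: bool = False
    interruptions_per_hour: float = 0.0  # assumed estimate for spot offers


def score(offer, interruption_cost_usd):
    """Hourly price plus the expected cost of interruptions (assumed model)."""
    return offer.hourly_usd + offer.interruptions_per_hour * interruption_cost_usd


def pick_offer(offers, need_vcpu, need_mem_gib, spot_ok,
               interruption_cost_usd=0.20):
    candidates = [
        o for o in offers
        if o.vcpu >= need_vcpu and o.mem_gib >= need_mem_gib
        and (spot_ok or not o.spot)
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda o: score(o, interruption_cost_usd))


# Hypothetical catalog; names and prices are invented for illustration.
offers = [
    Offer("general-2x8", "zone-a", 2, 8, 0.096),
    Offer("general-2x8", "zone-b", 2, 8, 0.035, spot=True,
          interruptions_per_hour=0.05),
    Offer("general-2x8", "zone-c", 2, 8, 0.030, spot=True,
          interruptions_per_hour=0.20),
    Offer("compute-2x4", "zone-a", 2, 4, 0.085),
    Offer("memory-2x16", "zone-a", 2, 16, 0.126),
]
print(pick_offer(offers, 2, 8, spot_ok=True).zone)    # → zone-b
print(pick_offer(offers, 2, 8, spot_ok=False).zone)   # → zone-a
print(pick_offer(offers, 2, 12, spot_ok=False).instance_type)  # → memory-2x16
```

The zone-c spot offer has the lowest sticker price. It loses once its higher interruption rate is priced in.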

As workloads evolve, DevZero uses CRIU to migrate them with zero downtime, for example moving batch jobs to spot instances and memory-heavy apps to memory-optimized nodes. It works with Karpenter to anticipate demand and optimize both cost and performance.

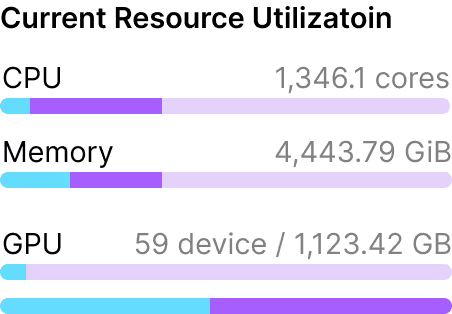

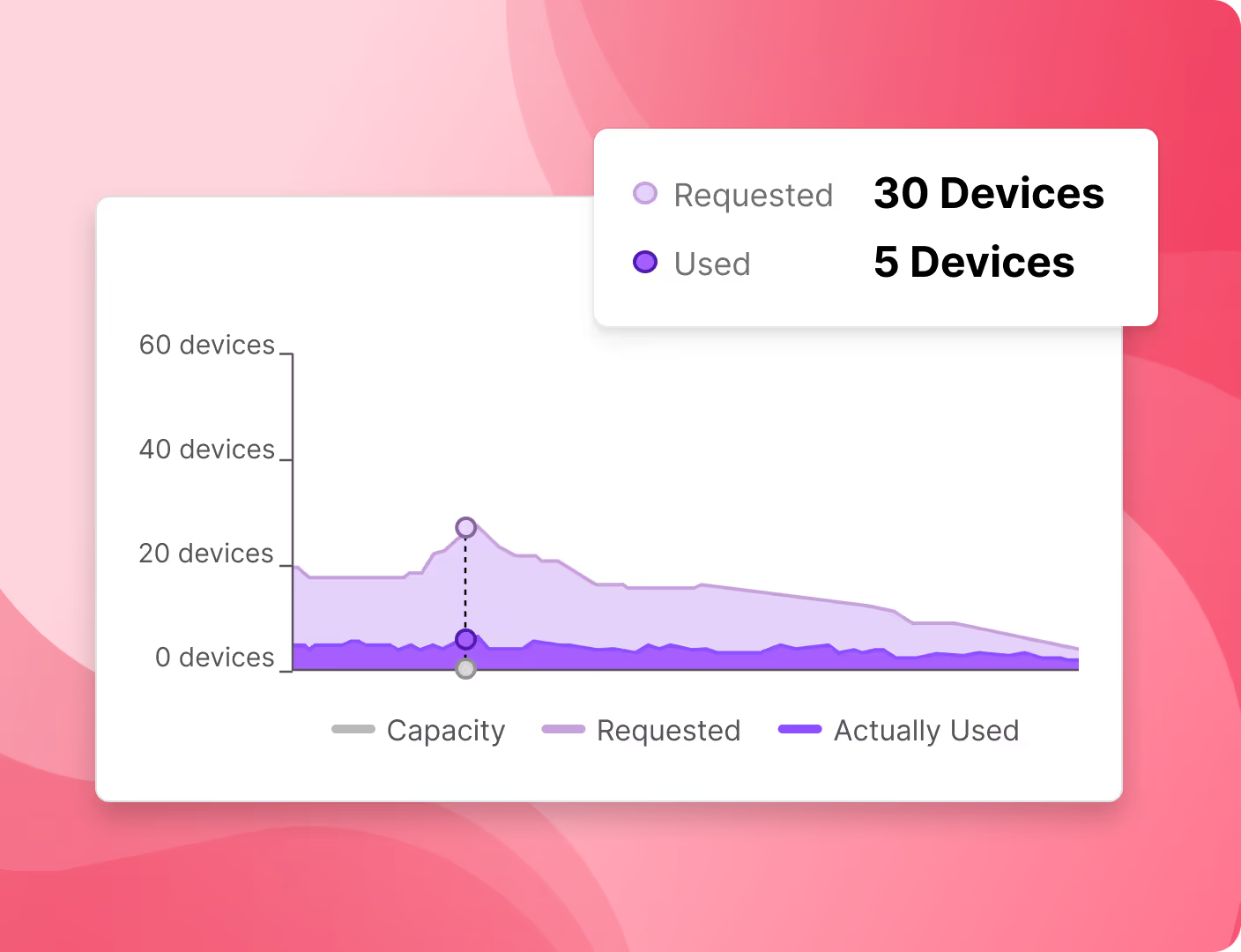

GPU Optimization

GPUs are expensive and often underutilized. Teams overprovision for peak demand, actual utilization sits at 20–30%, and costs soar.

DevZero provides true workload-level GPU optimization, not just node-level scaling. The platform monitors actual GPU utilization and dynamically adjusts allocations based on real-time and predicted demand. Predictive scaling aligns GPU instances with projected demand rather than static metrics, which is critical for model training, inference, and data processing workloads.
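
As a back-of-the-envelope illustration of workload-level GPU rightsizing, the sketch below estimates how many GPUs a workload needs from per-GPU utilization and memory use, targeting 80% utilization. It is a hypothetical sizing rule, not DevZero's method. It assumes the work can actually be consolidated, for example across fewer replicas or by sharing GPUs.

```python
import math


def gpus_needed(util_pct, mem_used_gib, gpu_mem_gib, target_util_pct=80):
    """Estimate GPUs needed for a workload (hypothetical sizing rule).

    util_pct: peak compute utilization (%) observed on each allocated GPU.
    mem_used_gib: peak GPU memory (GiB) used on each allocated GPU.
    """
    compute_gpus = math.ceil(sum(util_pct) / target_util_pct)
    memory_gpus = math.ceil(sum(mem_used_gib) / gpu_mem_gib)
    return max(1, compute_gpus, memory_gpus)


# Eight 80 GiB GPUs, each peaking at 10% utilization and 6 GiB of memory.
print(gpus_needed([10] * 8, [6] * 8, gpu_mem_gib=80))  # → 1
# Four 40 GiB GPUs: compute fits on 2 GPUs, but memory needs 3.
print(gpus_needed([35, 40, 20, 25], [30] * 4, gpu_mem_gib=40))  # → 3
```

Checking memory as well as compute matters: a GPU at 25% utilization can still be full because of a large model's memory footprint.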

How it Works

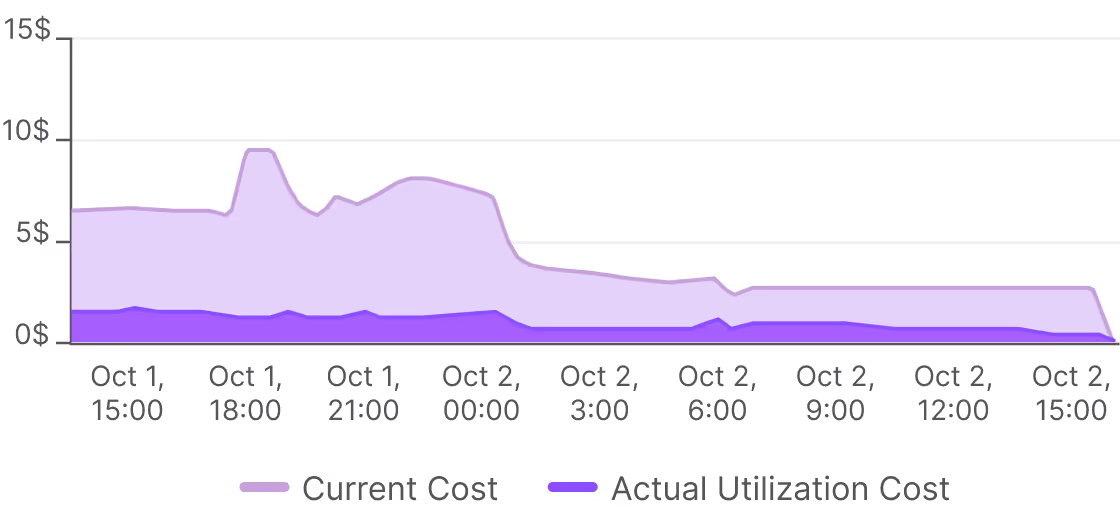

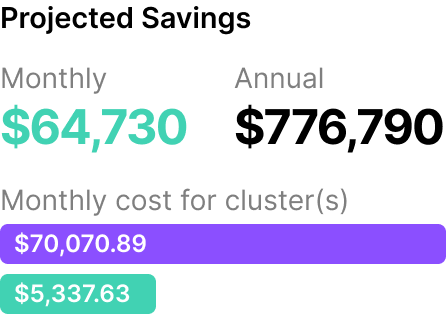

Cut Kubernetes Costs with Smarter Resource Optimization

DevZero boosts Kubernetes efficiency with live rightsizing, automatic instance selection, and adaptive scaling. No application changes are required: you get better bin packing, higher node utilization, and real savings.

Frequently asked questions

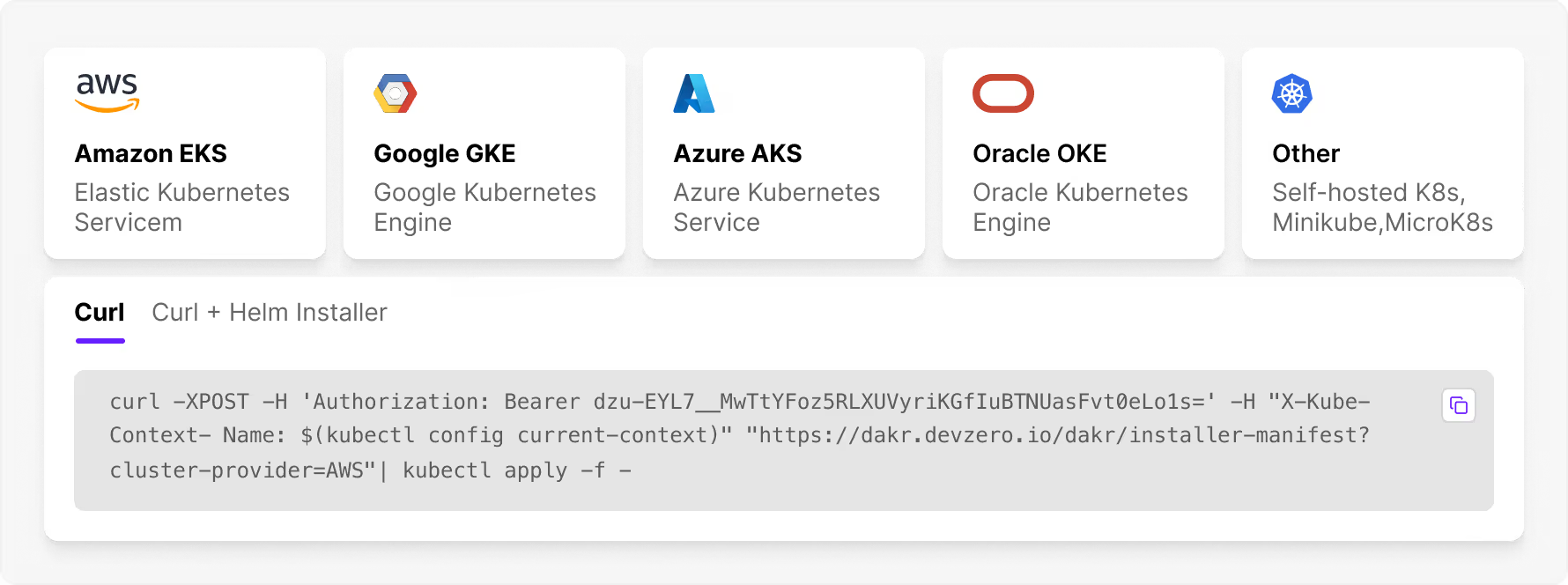

DevZero installs in under 30 seconds with a single command. Our lightweight operator immediately begins collecting telemetry data from your cluster, giving you instant visibility into resource usage and optimization opportunities. No complex configuration or infrastructure changes are required.

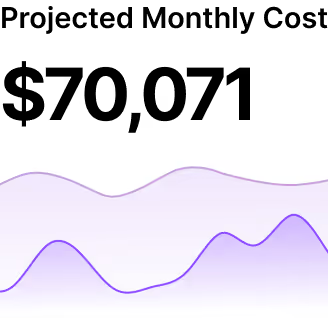

DevZero is a Kubernetes cost optimization platform that uses AI-driven automation to reduce cloud spending by 40-70%. It continuously rightsizes workloads, optimizes nodes, and intelligently selects instance types, all without requiring any changes to your applications or restarting your services.

DevZero supports Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), Oracle Cloud Infrastructure (OCI), and on-premises Kubernetes clusters.

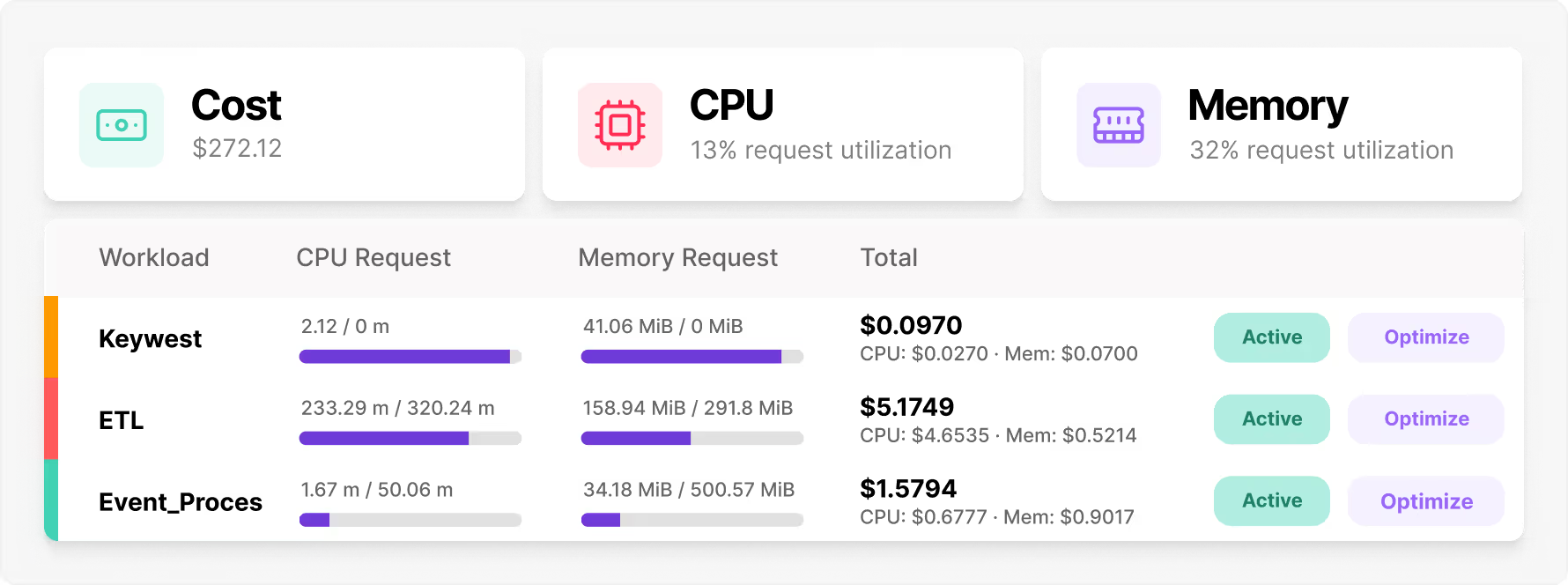

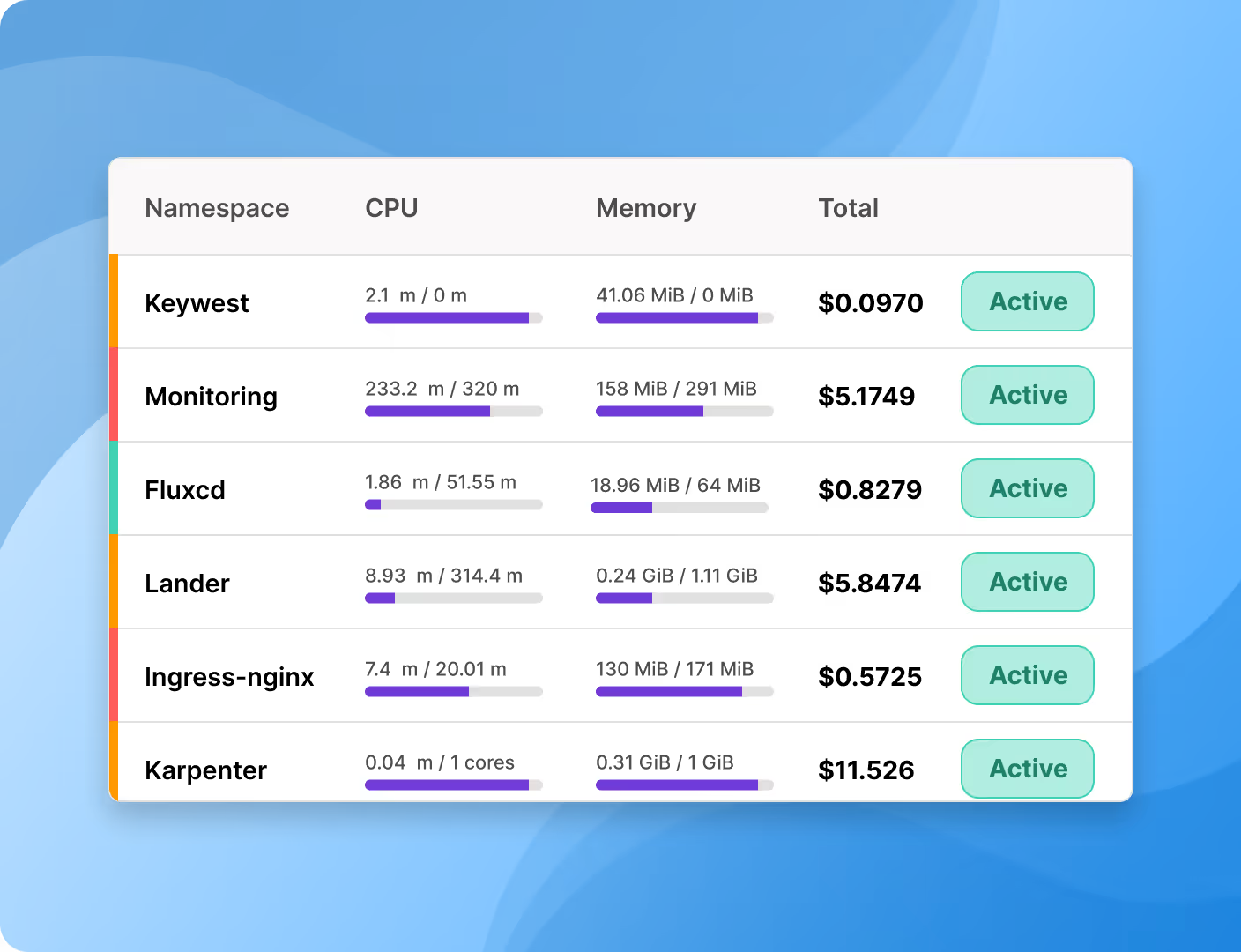

AWS Cost Explorer, Azure Cost Management, and GCP's billing tools show costs at the node level. DevZero shows Kubernetes-native costs broken down by pod, deployment, namespace, and workload, giving you the granularity containerized environments need.
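
One simple way to turn a node-level bill into Kubernetes-native costs takes three steps:

1. Split each node's hourly price between CPU and memory.
2. Charge each pod for its share of the requested resources.
3. Report whatever is left as idle capacity.

The 50/50 CPU/memory split and the function name are assumptions for illustration. This is not necessarily how DevZero computes costs; other models, for example weighting by actual usage, are also common.

```python
from collections import defaultdict


def cost_by_namespace(node_hourly_usd, node_cpu_m, node_mem_mib, pods,
                      cpu_share=0.5):
    """Allocate one node's hourly cost to namespaces by resource requests.

    pods: list of (namespace, pod_name, cpu_request_m, mem_request_mib).
    cpu_share: assumed fraction of the node price attributed to CPU.
    """
    cpu_pool = node_hourly_usd * cpu_share
    mem_pool = node_hourly_usd - cpu_pool
    costs = defaultdict(float)
    for namespace, _pod, cpu_m, mem_mib in pods:
        costs[namespace] += (cpu_pool * cpu_m / node_cpu_m
                             + mem_pool * mem_mib / node_mem_mib)
    allocated = sum(costs.values())
    costs["(idle)"] = node_hourly_usd - allocated  # capacity nobody requested
    return dict(costs)


pods = [
    ("checkout", "api", 1000, 4096),
    ("checkout", "worker", 1000, 4096),
    ("search", "indexer", 1000, 2048),
]
for ns, usd in cost_by_namespace(0.40, 4000, 16384, pods).items():
    print(f"{ns:10s} ${usd:.3f}/h")
```

With these inputs, on a node costing $0.40/h:

- The checkout namespace is charged $0.200/h.
- The search namespace is charged $0.075/h.
- The remaining $0.125/h is idle.

The idle share is exactly the waste that a node-level bill hides.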

DevZero complements rather than replaces autoscalers like Karpenter and Cluster Autoscaler. While those tools focus on node-level scaling, DevZero operates at three levels: cluster, node, and individual workload. Many customers run DevZero alongside Karpenter or KEDA, and our case studies show an 80% cost reduction even for customers already using these autoscalers.

The DevZero operator gathers only resource utilization data (compute, memory, and network), along with workload names and types. We do not have access to logs or application-specific data, and our cost-monitoring operator is read-only.

Absolutely. DevZero is specifically designed for GPU-intensive workloads and uses live migration to keep even your most demanding AI jobs running, adaptive, and continuously available. Unlike basic autoscalers, DevZero provides specialized GPU optimization, including intelligent scheduling and utilization monitoring, and can reduce GPU infrastructure costs by 40–70%. The platform dynamically adjusts GPU allocations based on actual usage patterns. This prevents the common problem of provisioning 8–12 GPUs when actual utilization amounts to less than one GPU.

No. Karpenter has no visibility into your AWS Reserved Instances (RIs) or Savings Plan commitments. It makes provisioning decisions based solely on capacity requirements and basic cost preferences (spot vs. on-demand), not on your actual pricing agreements.

This creates a common problem: Karpenter might provision spot or on-demand capacity while your RI capacity sits unused, so you pay for both. For organizations with significant RI or Savings Plan commitments, this can negate much of Karpenter's cost savings. DevZero is cost-aware and uses your existing commitments first, then fills the remaining capacity with spot or on-demand instances. This approach typically reduces costs by 70–80% compared to using Karpenter alone.
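
The commitment-first ordering can be illustrated with a small cost comparison. The sketch treats unused RI or Savings Plan capacity as prepaid, so its marginal cost is zero. It fills any remaining demand with a fixed mix of spot and on-demand capacity, then compares the result with a plan that ignores commitments. The rates, the 50% spot mix, and the function name are hypothetical. The resulting numbers illustrate the mechanism and are not DevZero benchmark results.

```python
def marginal_cost(demand_vcpu_h, unused_commit_vcpu_h, spot_fraction,
                  spot_rate, on_demand_rate, use_commitments=True):
    """Extra spend (USD) on top of already-paid commitments.

    Demand is served from unused commitments first (if enabled), then split
    between spot and on-demand capacity according to spot_fraction.
    """
    if use_commitments:
        from_commit = min(demand_vcpu_h, unused_commit_vcpu_h)
    else:
        from_commit = 0
    remaining = demand_vcpu_h - from_commit
    spot = remaining * spot_fraction
    on_demand = remaining - spot
    return spot * spot_rate + on_demand * on_demand_rate


# 100 vCPU-hours of demand, 60 of them coverable by idle commitments.
args = dict(demand_vcpu_h=100, unused_commit_vcpu_h=60, spot_fraction=0.5,
            spot_rate=0.02, on_demand_rate=0.05)
aware = marginal_cost(**args)
blind = marginal_cost(**args, use_commitments=False)
print(f"commitment-aware: ${aware:.2f}, commitment-blind: ${blind:.2f}")
# → commitment-aware: $1.40, commitment-blind: $3.50
```

Both plans still pay for the commitments themselves, so the gap between them is spend that buys capacity you already own.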